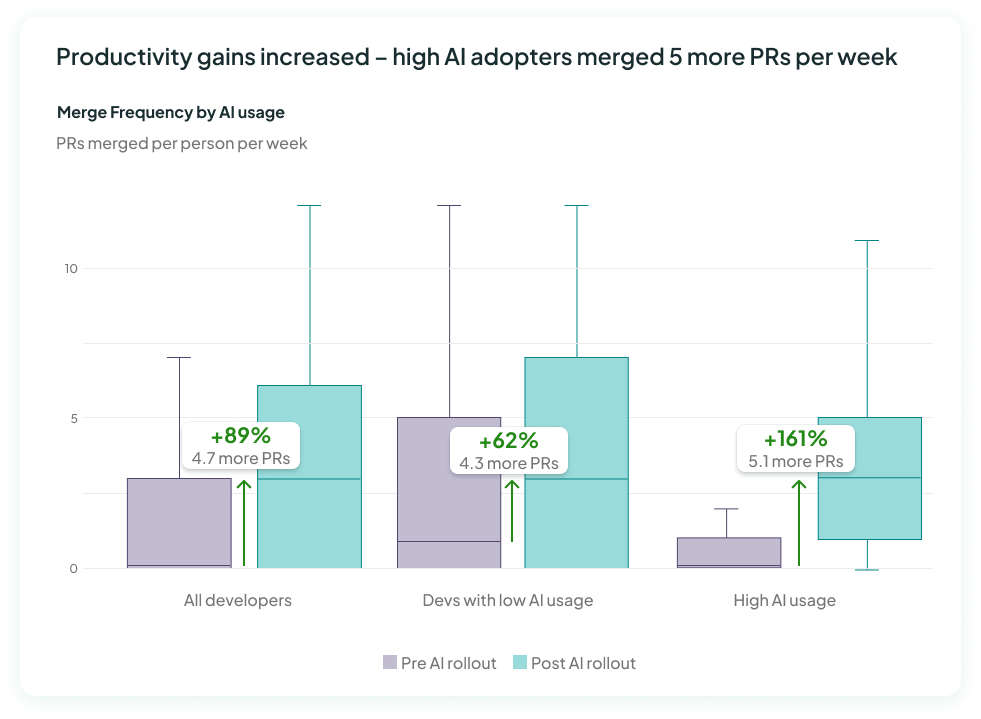

increase in Merge Frequency

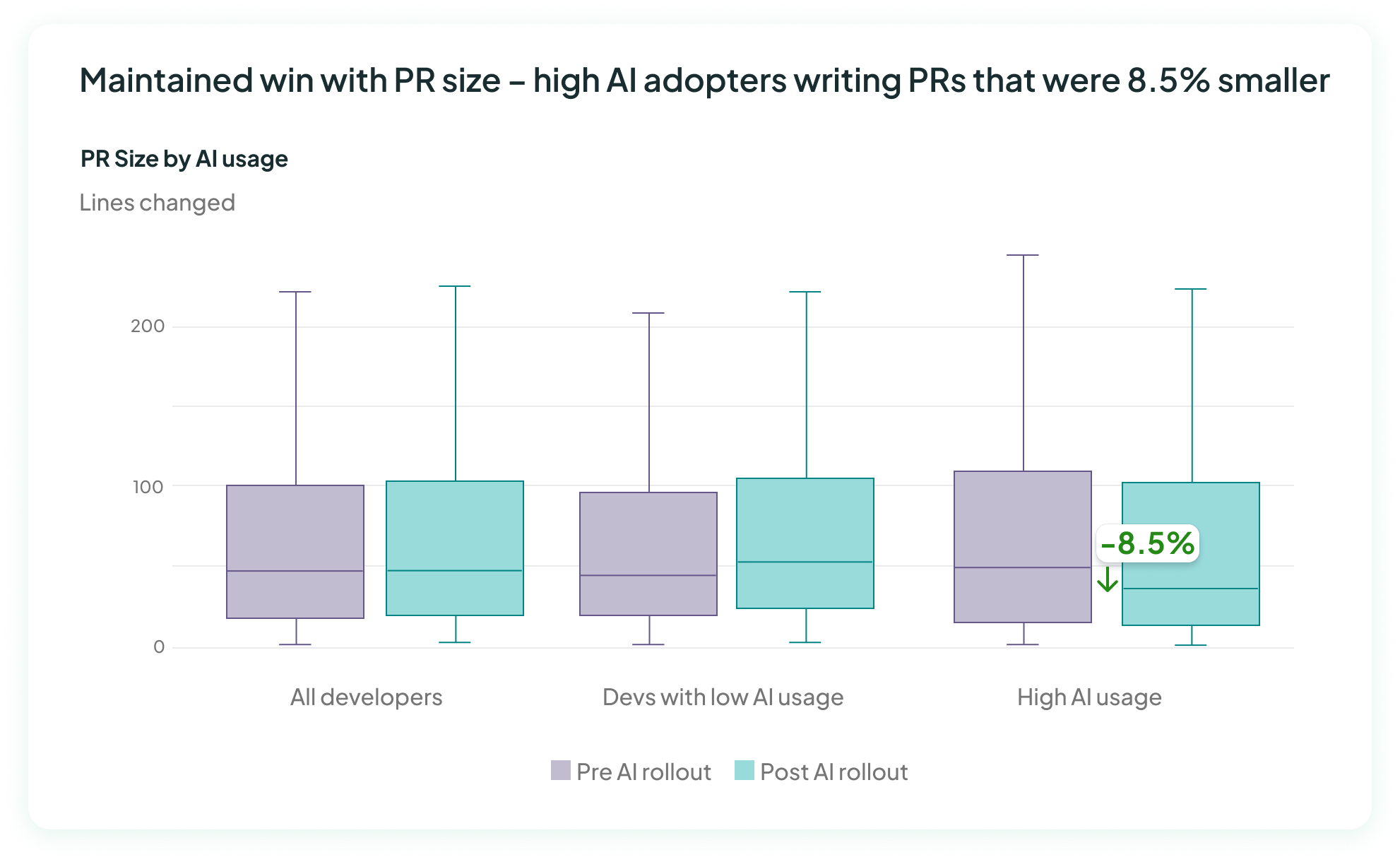

decrease in PR size

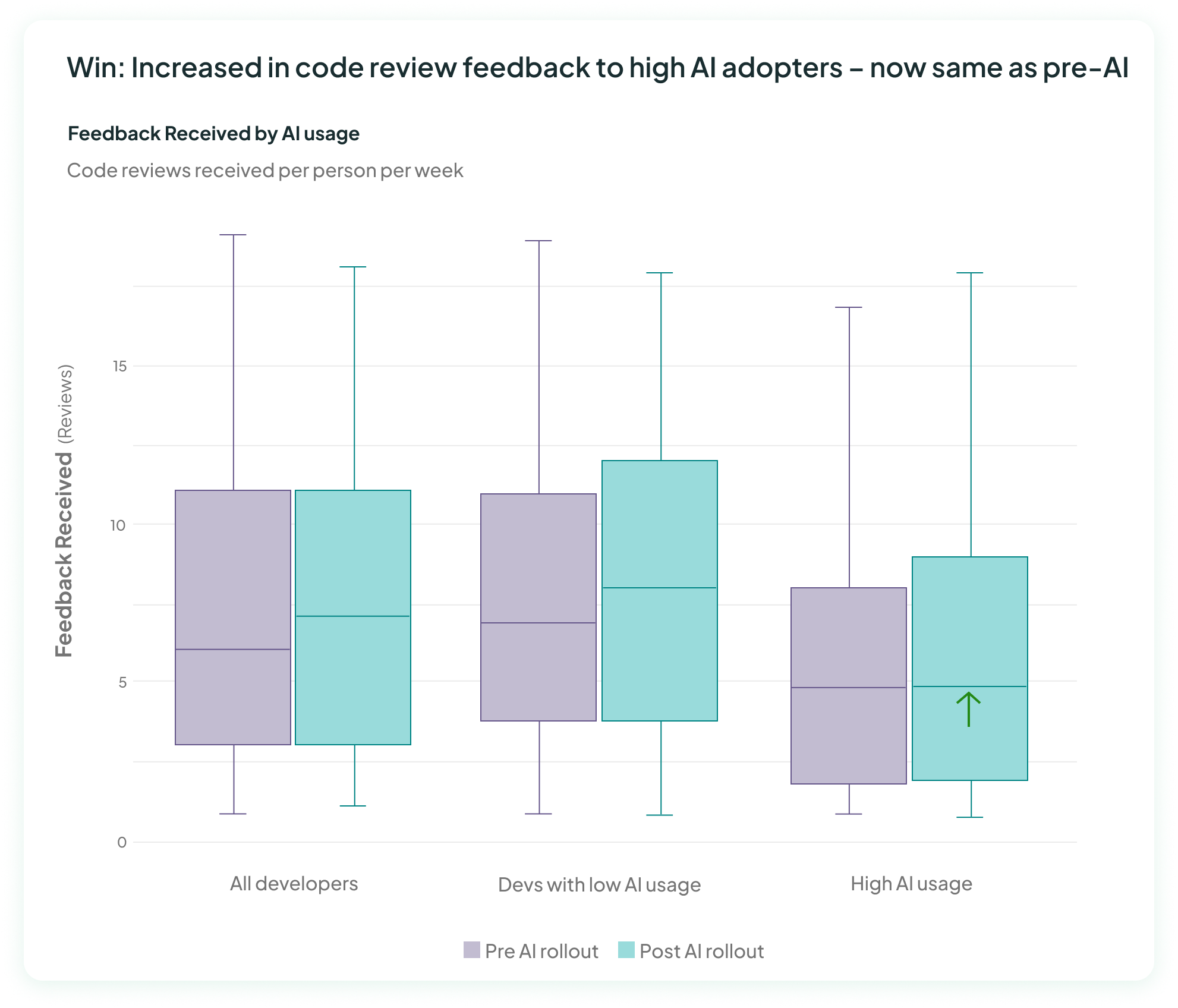

… while ensuring that AI PRs got enough reviews

By tracking their metrics on Multitudes, they were able to spot the leading indicators of how AI was impacting quality and double down on what was working well. Ultimately, their high AI adopters reduced PR sizes by 8.5% while merging 161% more PRs.

Eucalyptus rolled out AI coding tools to their engineering team in early 2025. Bronwyn Mercer, the Head of Security and Infrastructure, and Adrian Andreacchio, Platform Engineering Lead, were tasked with quantifying the impact of AI and ensuring that they got as much value as possible from these tools.

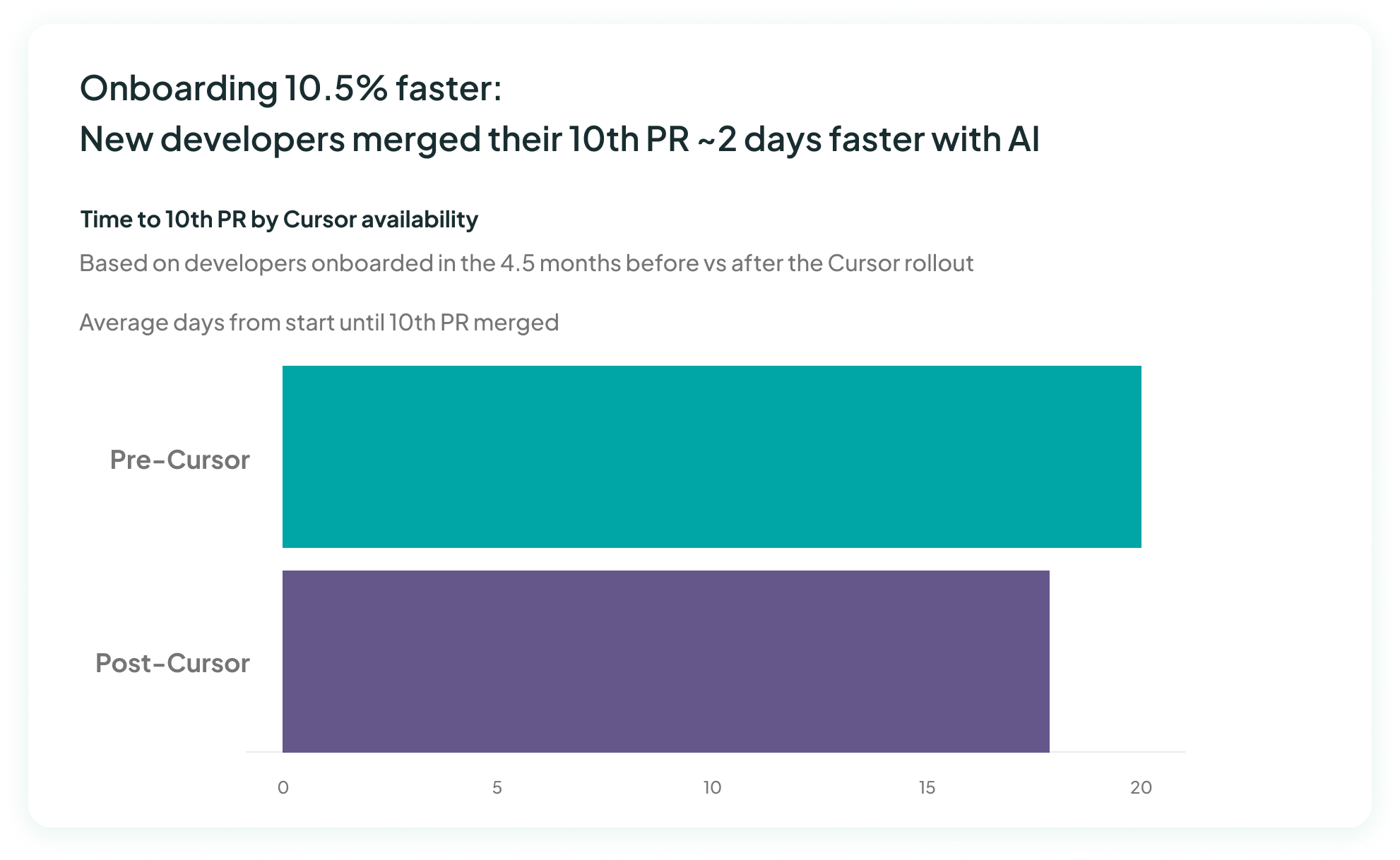

Eucalyptus, like many other tech companies, had set big goals around their use of AI. A key benefit they hoped to get from AI was faster onboarding for new developers – they were going through a big period of growth, which meant their engineering team was set to double over the following year. A secondary goal for using AI was to increase developer productivity across the team.

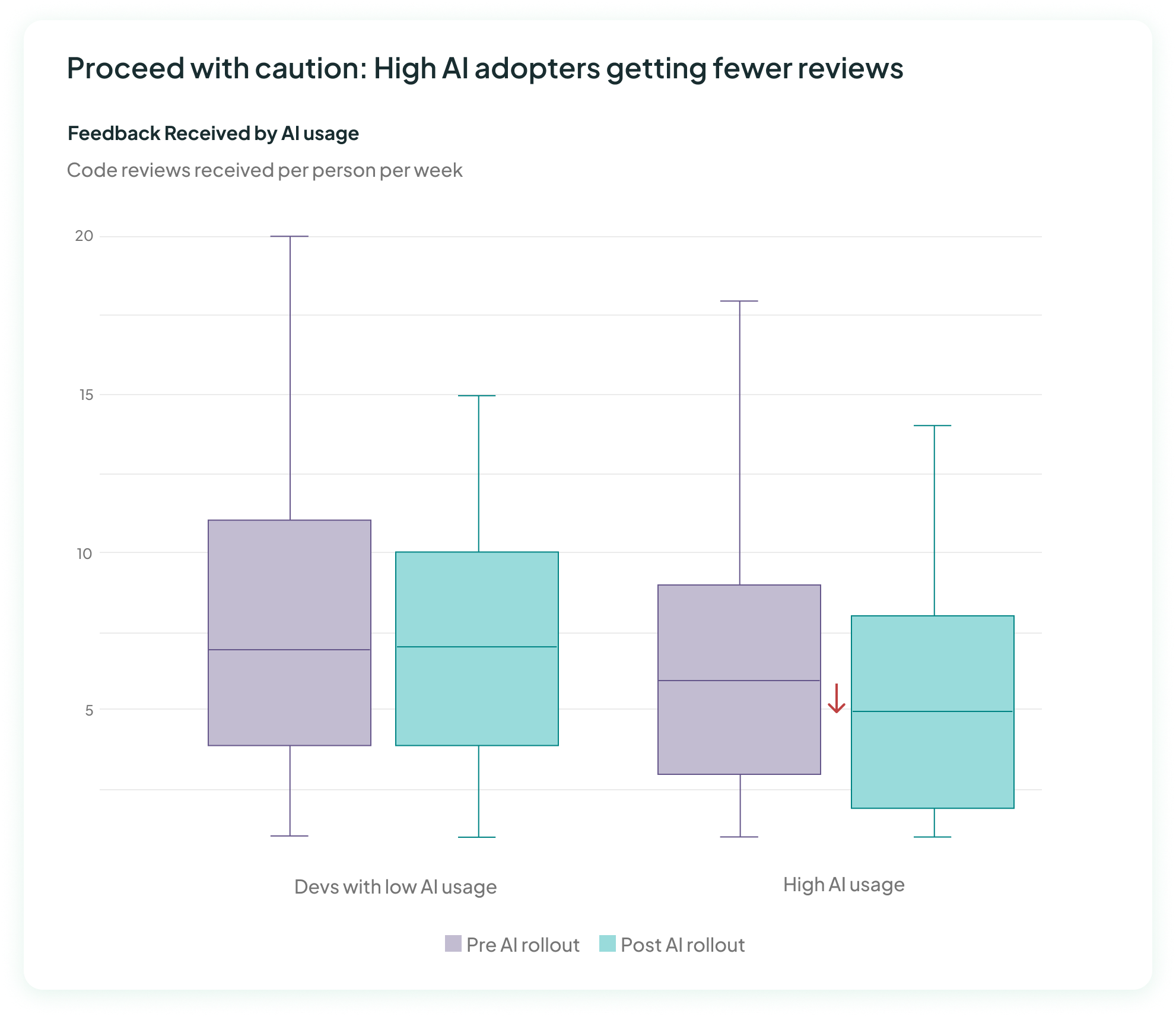

However, they were also aware of the issues with AI slop. Eucalyptus’s engineering team prides itself on having a high-quality codebase that’s quick and fun for developers to build on. Adrian and Bronwyn didn’t want AI speed to bring tech debt that would slow them down or degrade the developer experience. One concern was that AI slop could create bottlenecks in the code review process – with developers spending more time reviewing poor-quality AI code, or doing more rounds of editing to get an AI-written PR to the right quality level.

A second challenge was that the AI rollout was happening in the build-up to a major feature release – so Bronwyn and Adrian had to make sure that the team could still deliver quickly while learning AI tooling and managing AI slop. They had their work cut out for them!

To help them spot leading indicators of AI impact, Eucalyptus worked with Multitudes. The telemetry data from Multitudes meant that they could get low-effort, ongoing updates on the AI rollout without interrupting the flow of work.

The Multitudes data showed positive early results for productivity but mixed results on the quality side.

When we checked in on the rollout, Eucalyptus already had two positive productivity indicators:

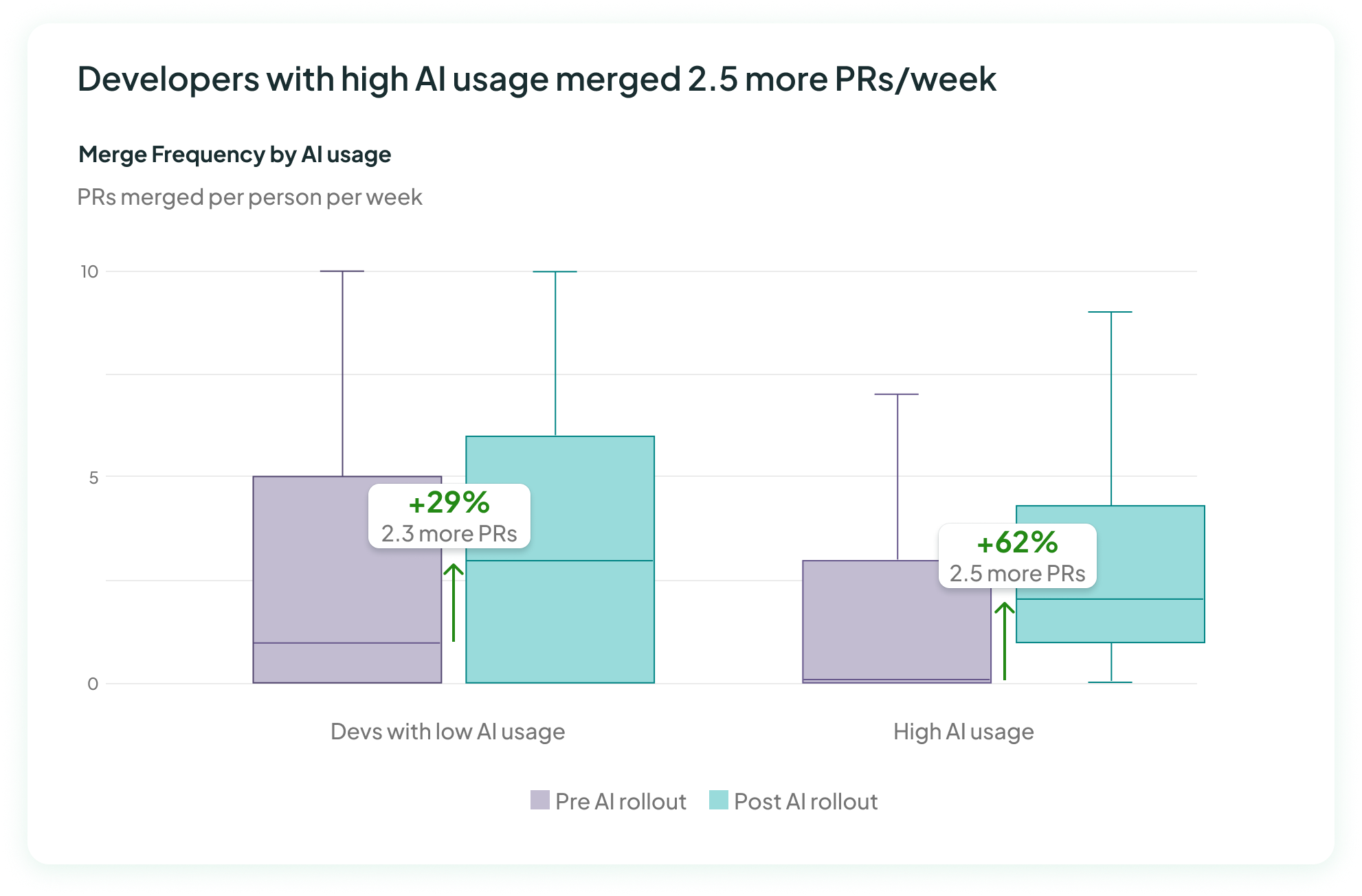

The fact that high AI adopters were merging significantly more PRs than others using AI implies that AI did have a net positive impact on PRs merged, above and beyond changes coming from other factors.

Multitudes research backs this up – across the board, there’s typically a 27% increase in PRs merged when teams adopt AI.

This metric for onboarding speed comes from Spotify – time to 10th PR merged shows how long it takes people to be truly up and running in the codebase. At Eucalyptus, they saw a 10.5% decrease in onboarding time for people who started after AI tooling was available versus those who started before.
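To make the onboarding metric concrete, here is a minimal sketch of how "time to 10th PR merged" could be computed for one developer. The function name, the input shape (a start date plus a list of merge dates), and the sample data are all hypothetical; this is not how Multitudes or Spotify implement the metric.

```python
from datetime import date


def days_to_nth_merged_pr(start_date, merge_dates, n=10):
    """Days from a developer's start date to their n-th merged PR.

    Returns None if the developer has not yet merged n PRs.
    Assumption: merge_dates holds one entry per merged PR.
    """
    # Only count PRs merged on or after the start date, in time order.
    merged = sorted(d for d in merge_dates if d >= start_date)
    if len(merged) < n:
        return None
    return (merged[n - 1] - start_date).days


# Hypothetical developer: starts on 6 Jan 2025, merges a PR every two days
# from 8 Jan onward (12 PRs in total).
start = date(2025, 1, 6)
merges = [date(2025, 1, 8 + 2 * i) for i in range(12)]

print(days_to_nth_merged_pr(start, merges))      # 10th PR lands on 26 Jan -> 20
print(days_to_nth_merged_pr(start, merges[:5]))  # fewer than 10 PRs -> None
```

Comparing this value across the cohort that started before AI tooling and the cohort that started after gives the kind of before/after onboarding comparison described above.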

The Eucalyptus team wanted leading indicators of how AI was impacting codebase quality; they weren't going to wait for an AI-caused incident to see the impact on MTTR.

To that end, they looked at two leading indicators from Multitudes for whether AI could be causing quality issues: PR size and the number of reviews that PRs received.

The initial metrics on these for Eucalyptus were mixed but promising:

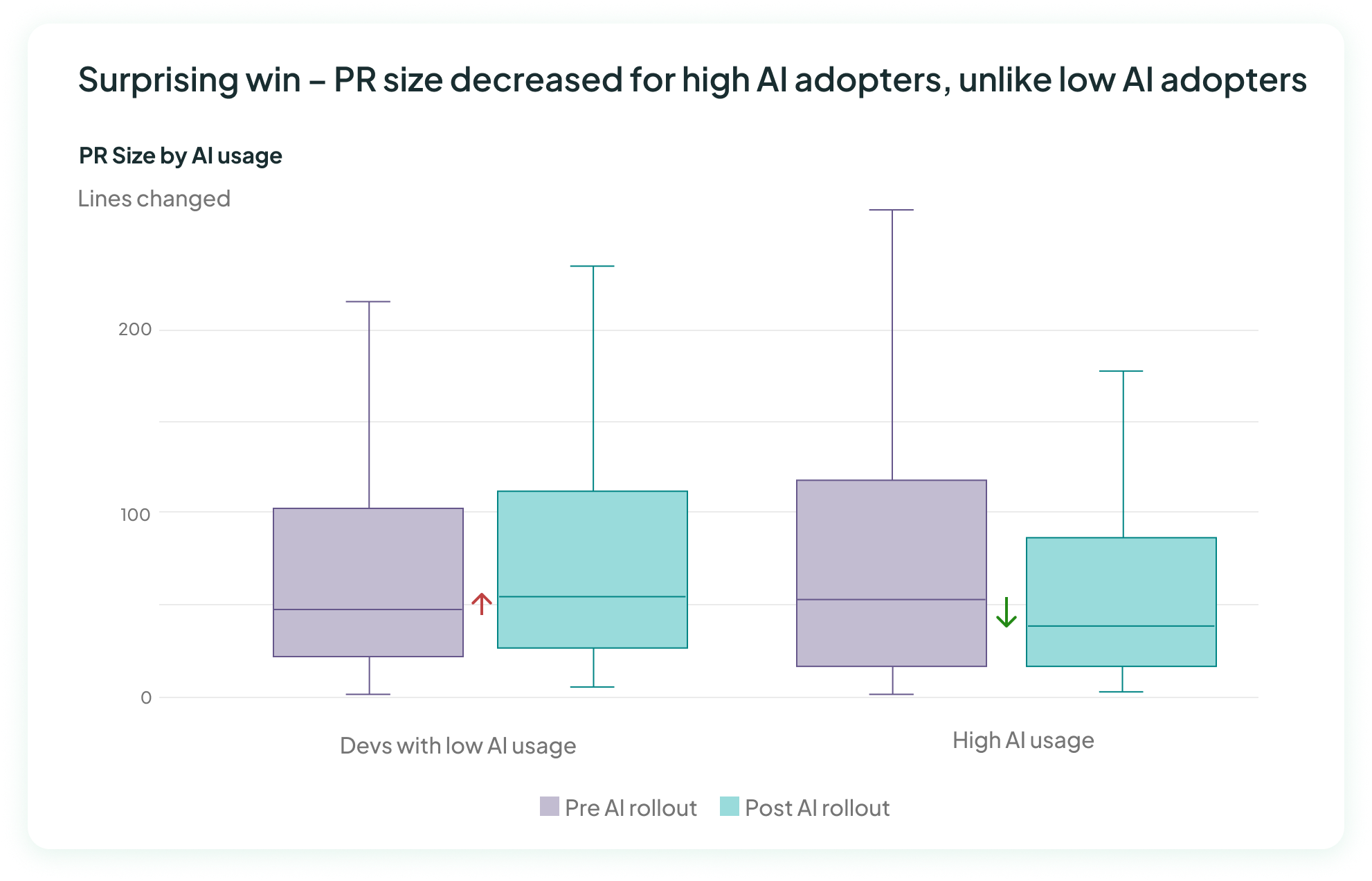

Here, the Euc team had an amazing win – PR size actually decreased for the high AI users! The question here was: How could they sustain this early sign of progress even as they increased their AI usage?

This metric was interesting – while there was no change to the number of reviews that low AI users were getting, there was a decrease in the number of reviews going to high AI users. Given the increase in PRs merged, this was especially surprising. A key question going forward was how to make sure that people using AI were still getting enough reviews.

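From those insights, Bronwyn and Adrian had two questions to explore: how to sustain the smaller PR sizes as AI usage grew, and how to make sure that people using AI still got enough reviews.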

Conversations with their engineers showed several things that had gone well in the rollout:

Their developers cited several norms they were thinking of when they used AI:

The big example here was that when the platform team rolled out AI tooling, Adrian and others shared that they wouldn’t review PRs if they were too long – so developers across the org knew it was important to keep PR size low even while using AI.

With clear culture norms and goals with AI, Eucalyptus developers found practices that worked – and which even engaged AI to help them achieve the end goal of no AI slop:

These insights meant that the platform team knew what practices to double down on as they encouraged more AI usage across the team. Adrian also put together Cursor rules that people can add locally, to help ensure that AI-written PRs follow their style guide.

Over the following month, the continued AI push meant that AI adoption increased 26% across the engineering team (based on DAU) – so it was another good opportunity to check in on the impact of AI with the increased usage.

The results were resoundingly positive:

They saw even bigger improvements in Merge Frequency, with it increasing by 161% for high AI adopters compared to the “before AI” period. That’s roughly 100 percentage points more than the 62% increase that low AI adopters saw. That increase happened even as the onboarding benefits continued, with time to 10th PR staying low.
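The comparison between the two groups is a percentage-point gap, which is different from a relative percent change. The short sketch below works through the arithmetic using the 161% and 62% figures from this case study; the baseline of 100 merged PRs is a hypothetical number chosen only to make the calculation easy to follow.

```python
def pct_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) * 100 / before


def percentage_point_gap(pct_a, pct_b):
    """Difference between two percentages, in percentage points."""
    return pct_a - pct_b


# Increases reported in the case study, relative to the "before AI" period.
high_adopter_increase = 161.0
low_adopter_increase = 62.0

# Hypothetical baseline: 100 PRs merged before AI by each group.
baseline = 100
high_after = baseline * (1 + high_adopter_increase / 100)  # 261 PRs
low_after = baseline * (1 + low_adopter_increase / 100)    # 162 PRs

gap = percentage_point_gap(high_adopter_increase, low_adopter_increase)
print(gap)  # 99.0 percentage points
```

Because low AI adopters also merged more PRs over the same period, the gap between the groups, rather than the raw 161%, is the better estimate of what AI usage itself added.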

PR sizes remained low for high AI adopters – overall, they actually had an 8.5% decrease in PR size. This is an even bigger win given that the low AI adopters saw their PR size increase over this period, which suggests that broader org pressures would have pushed PR sizes higher regardless of AI. Thanks to the improvements with the high AI adopters, overall organizational PR size remained constant.

Maintaining a consistent PR size while rolling out AI is a huge win – it means Adrian and Bronwyn are succeeding in preventing AI slop while getting benefits from AI.

The other win was on the reviews side. Their rollout strategies worked, with the number of reviews going up for the high AI users, meaning that reviews received were now at pre-AI levels.

“It was great to have real-time data to compare and see trends over time. In particular, it helped us to be able to do a deep dive into AI – that meant we could do the rollout quickly, knowing we would have leading indicators of AI’s impact. Those leading indicators gave us time to adjust as needed.”

- Adrian Andreacchio, Platform Engineering Lead

Adrian and Bronwyn have even more reason to double down on their strong code review culture at Eucalyptus. Over time, they’ll be able to validate the quality indicators (like PR size and human reviews) with more lagging indicators, like Change Failure Rate and Mean Time to Recovery.

As they try new AI interventions – like Adrian’s Cursor rules that he’s putting in a centralized repo – they can measure the impact of each one in Multitudes’s AI impact feature and see how it impacts AI adoption, productivity, and of course, code quality.